A client recently shared an article from Supply Chain Management outlining five leading supply chain trends. The fifth trend was the increasing importance of “Big Data.” And while the author didn’t spell it out, the first four trends are also heavily data-dependent. It’s hard to argue otherwise: “Big Data” is all the rage.

But is this right?

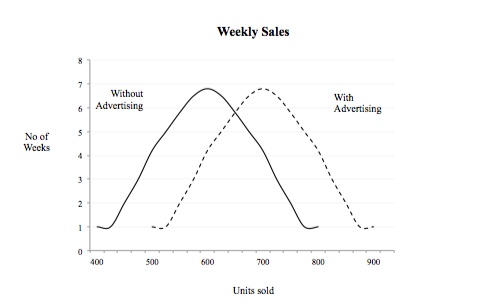

No, I’m not seeking to revive the debate over intuition and analysis (Blink vs. Freakonomics, anyone?). My point is that the issue isn’t one of BIG data, but rather BETTER data. We don’t need data that is more plentiful; we need data that is more revealing. We don’t need more hay; we need the needle within it. The value isn’t in more data. It’s in better questions upfront that define the data we need, and in more revealing analysis of that data, whether that data is “big” or “small.” This is what leads to better insight, better decision making, and competitive advantage.

As we seek to better “connect the dots” in our work environments, we need to remember: the data are the dots; the analyses are the connecting lines. I’d rather have fewer dots that are meaningfully connected than more dots that aren’t.